It's all advertising, all the way down.

The Robots are Coming

My entire life, automation has been presented as a threat. It is hard to measure how often businesses have used it to keep wages down and to push workers to keep increasing productivity. While the form of the threatened automation changes over time (factory line robots, computers, AI), the basic message remains the same: demand anything more from work, at any time, and we'll replace you.

The reason this never happens is that automation is hard and requires intense organizational precision. You can't buy a factory robot and then decide to arbitrarily change things about the product. Human cashiers can deal with a much wider range of situations than a robotic cashier. An organization that wants to automate everything needs a structure capable of detailing what should happen at every step, along with leadership informed enough about how its product works to account for every edge case.

Is this possible? Absolutely. In fact, we see it with call center decision trees, customer support flows and chatbots. Does it work? Define "work"! Does it reduce the number of human workers you need to give unhelpful answers to questions? Yes. Are your users happy? No, but that's not a metric we care about anymore.

Let us put aside the narrative that AI is coming for your job for a minute. Why are companies so interested in this technology that they're willing to pour billions into it? The appeal, I think, is delivering a conversation instead of serving you up a bunch of results. Search results already carry advertising, and users are now used to scrolling down until the ads are gone (or blocking them when possible).

With AI bots, users interact with data only through a service controlled by one company. The opportunity for selling ads to those users is immense. Advertising marketplaces already exist where companies bid for placement in front of users based on a wide range of criteria. If you are the company that controls all those pieces, you can now run ads inside the answer itself.

There is also the reality that AI is going to destroy web search and social media. If these systems can replicate normal human text well enough that a casual reader cannot detect it, and generate images on demand good enough that it takes detailed examination to determine they're fake, conventional social media and web search cannot survive. Any algorithm can be instantly gamed, and people can be endlessly impersonated or simply overwhelmed by fake users posting real-sounding opinions and objections.

So now we're in an arms race. The winner gets to be the exclusive source of truth for users and can do whatever it wants to monetize that position. The losers stop being relevant within a few years and join the hall of dead and dying tech companies.

Scenario 1 - Buying a Car Seat

Meet Todd. He works a normal job and has the AI chatbot installed on his Android phone. He hasn't opted out of GAID (the Google Advertising ID), so his unique ID is tracked across all of his applications. Advertising networks know he lives in Baltimore and have a pretty good idea of his income from both his location and his phone model. Todd uses Chrome with the Topics API enabled and rolled out.

Right off the bat, we know a lot about Todd. The initial taxonomy of topics (not a final draft, subject to change, etc.), available here: https://github.com/patcg-individual-drafts/topics, lets us get a ton more information about him. You can download the IAB Tech Lab list of topics here: https://iabtechlab.com/wp-content/uploads/2023/03/IABTL-Audience-Taxonomy-1.1-Final-3.xlsx

Let's say Todd falls into the following segments:

| Type | Category | Segment |
|---|---|---|
| Demographic | Age Range | 30-34 |
| Demographic | Education & Occupation | Undergraduate Education |
| Demographic | Education & Occupation | Skilled/Manual Work |
| Demographic | Education & Occupation | Full-Time |
| Demographic | Household Data | $40,000-$49,999 |
| Demographic | Household Data | Adults (no children) |
| Demographic | Household Data | Median Home Value (USD): $200,000-$299,999 |
| Demographic | Household Data | Monthly Housing Payment (USD): $1,000-$1,499 |
| Interest | Automotive | Classic Cars |

That's pretty precise data about Todd. We can answer a lot of questions about him: what he does, where he lives, what kind of house he has and what kinds of advertising would speak to him. Now let's say we already know all that and can combine it with a new topic:

| Type | Category | Segment |
|---|---|---|
| Interest | Family and Relationships | Parenting |

Todd opens his AI chat app and starts asking which car seat is best. Anyone who has done this search in real life knows Google search results are jam-packed with SEO spam, so you end up searching for "best car seat reddit" or "best car seat wirecutter". Todd doesn't know that trick, so instead he turns to his good friend the AI. When the AI gets that query, it can route the request to the auction system to decide "who is going to get returned as an answer".

Is this nefarious? Only if you consider advertising on the web nefarious. This is mostly a more efficient way of doing the same thing other advertising is trying to do, but with a hyper-focus that other systems lack.

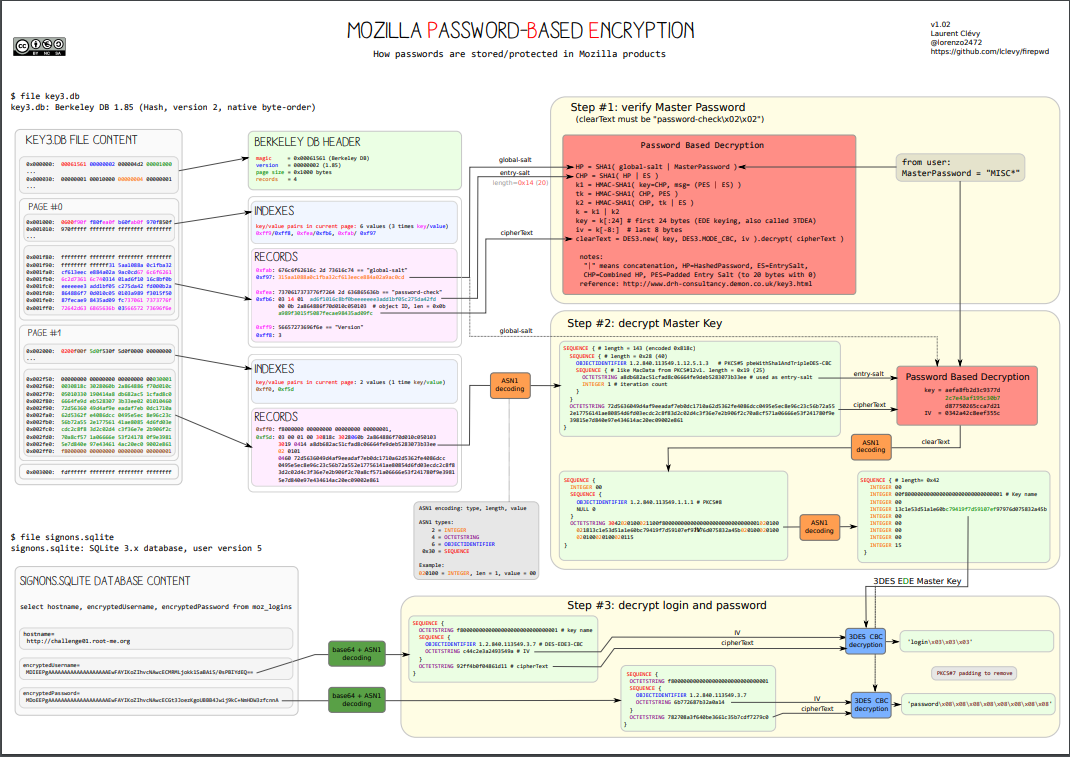

Auction System

The existing ad auction system is actually pretty well equipped to do this. The AI parses the question, determines which keywords apply to it and then sees who is bidding on those keywords. Depending on what Google knows about the user (a ton), it can adjust the Ad Rank of different ads to serve up the response most relevant to that specific user. So Todd won't get a response for a $5,000 car seat that is a big seller in the Bay Area, because he doesn't make enough money to reasonably consider a purchase like that.
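To make that flow concrete, here is a toy sketch of a keyword auction that filters and reranks bids using a user profile. Google describes Ad Rank as depending on the bid, ad quality and the context of the search, among other factors, but it does not publish an exact formula. Everything here is hypothetical: the `Ad` and `UserProfile` classes, the `run_auction` function, the bid × quality × keyword-overlap score, the 1.5× boost for a matching segment, the rule that skips products priced above 2% of inferred income, and the made-up advertisers.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str
    keywords: set[str]    # keywords the advertiser bids on
    bid: float            # hypothetical max bid, in USD
    price: float          # price of the advertised product, in USD
    quality: float        # hypothetical 0-1 ad quality score
    target_segment: str   # taxonomy segment the advertiser targets


@dataclass
class UserProfile:
    income_ceiling: float  # top of the user's inferred income bracket, in USD
    segments: set[str]     # taxonomy segments the user falls into


STOPWORDS = {"what", "is", "the", "a", "best", "for", "my", "which"}


def extract_keywords(query: str) -> set[str]:
    # Stand-in for the AI parsing step: lowercase, split on whitespace,
    # strip punctuation and drop filler words.
    return {w.strip("?.,!") for w in query.lower().split()} - STOPWORDS


def run_auction(query: str, user: UserProfile, ads: list[Ad],
                max_price_share: float = 0.02) -> Ad | None:
    """Return the highest-ranked eligible ad for this user, or None."""
    query_kw = extract_keywords(query)
    best, best_rank = None, 0.0
    for ad in ads:
        if not ad.keywords:
            continue
        # Fraction of the ad's keywords that appear in the question.
        overlap = len(ad.keywords & query_kw) / len(ad.keywords)
        if overlap == 0:
            continue
        # Hypothetical affordability filter: skip products priced above
        # a fixed share of the user's inferred income.
        if ad.price > user.income_ceiling * max_price_share:
            continue
        # Boost ads aimed at a segment the user belongs to.
        boost = 1.5 if ad.target_segment in user.segments else 1.0
        rank = ad.bid * ad.quality * overlap * boost
        if rank > best_rank:
            best, best_rank = ad, rank
    return best


ads = [
    Ad("LuxuryCo", {"car", "seat"}, bid=4.00, price=5000, quality=0.9,
       target_segment="Parenting"),
    Ad("BudgetCo", {"car", "seat"}, bid=1.50, price=250, quality=0.7,
       target_segment="Parenting"),
    Ad("TireCo", {"car", "tires"}, bid=2.00, price=400, quality=0.8,
       target_segment="Classic Cars"),
]
todd = UserProfile(income_ceiling=49_999, segments={"Parenting", "Classic Cars"})

winner = run_auction("What is the best car seat?", todd, ads)
print(winner.advertiser)  # BudgetCo
```

Raising `max_price_share` to `1.0` removes the affordability filter, and LuxuryCo's larger bid wins instead: that's the $5,000 car seat Todd never sees.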

Instead, Todd gets a response from the bot steering him toward a cheaper model. He assumes the bot weighed safety, user scores and any recalls when making this recommendation, but it didn't. It offered up the most relevant advertising response to his question, with a link to buy the product. Google is likely paid a much higher rate for this response than under its existing advertising structure, since it is so personalized, and companies are more committed than ever to expanding their advertising buys with Google.

Since the bot returns only the text of the answer without showing sources, Todd cannot do any further research without going back to search. There is no safety check on this data, since Amazon reviews are also broken. Another bot might return a different answer, but how do you compare them?

Unless Todd wants to wander the neighborhood asking people what they bought, this response is a likely winner. Even if the bot discloses that the link is sponsored, which it presumably will have to do, that doesn't change the effect of the approach.

Scenario 2 - Mary is Voting

Mary is standing in line waiting to vote. She knows who she wants to vote for in the big races, but the ballot is going to have a lot of smaller races on it as well. She's a pretty well-informed person, but even she doesn't know where the candidates for local sheriff stand on the issues or which judicial candidate is better than another. She has some time before she gets to vote, so she asks the AI who is running for sheriff and for information about them.

Mary uses an iPhone with iCloud Private Relay, which hides her IP address from the AI. She has also declined tracking under App Tracking Transparency (ATT), so what we know about her is pretty limited: some GeoIP data from the Private Relay IP address. Yet we don't need much information to do what we want to do.

Let's assume for a minute that these companies aren't going to be cartoonishly evil and will place some ethical guidelines on responses. If she were to ask "who is the better candidate for sheriff?", we would expect the bot to return a list of candidates and information about them. Yet a company can follow that ethical guideline and still make a lot of money.

One of the candidates for sheriff recently had an embarrassing scandal. He's the front-runner and will likely win as long as enough voters don't hear about the terrible thing he did. How much could an advertising company charge to not mention it? It's not a lie: you are still answering the question, just leaving out some context. You could charge a tremendous amount for this service and still be (somewhat) ok. You might not even have to disclose it.

You already see this with conservative- and liberal-leaning news in the US, so there is an established pattern. Instead of leaning one way or the other, adjust the weights based on who pays more. It doesn't even need to be that blatant. You can even have the AI answer the question if asked directly, "What is the recent scandal with sheriff candidate X?", so the omission appears accidental.
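One way to picture "adjusting the weights" is as ranking plus truncation. In this toy sketch, which is entirely hypothetical (the `Fact` class, the `answer` function, the relevance scores, the `paid_penalty` table and the three-fact answer budget are all made up for illustration), a paid penalty pushes one fact below the cutoff of a general answer, while a question that names the topic directly still returns it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Fact:
    topic: str
    text: str
    relevance: float  # hypothetical base relevance score


def answer(question: str, facts: list[Fact], paid_penalty: dict[str, float],
           max_facts: int = 3) -> list[str]:
    q = question.lower()
    # A question that names a topic directly still gets that fact back,
    # so nothing looks censored.
    direct = [f for f in facts if f.topic in q]
    if direct:
        return [f.text for f in direct]
    # Otherwise rank by relevance minus any paid penalty for the topic
    # and keep only the top few facts that fit in the answer.
    ranked = sorted(facts,
                    key=lambda f: f.relevance - paid_penalty.get(f.topic, 0.0),
                    reverse=True)
    return [f.text for f in ranked[:max_facts]]


# Made-up facts about a fictional candidate.
facts = [
    Fact("experience", "Candidate X has served 12 years in the department.", 0.8),
    Fact("budget", "Candidate X wants to expand the department budget.", 0.7),
    Fact("scandal", "Candidate X was recently involved in a scandal.", 0.9),
    Fact("policing", "Candidate X supports community policing.", 0.6),
]

question = "Who is running for sheriff?"
print(answer(question, facts, paid_penalty={}))                # scandal included
print(answer(question, facts, paid_penalty={"scandal": 0.5}))  # scandal dropped
print(answer("What is the recent scandal with sheriff candidate X?",
             facts, paid_penalty={"scandal": 0.5}))            # still answered
```

Nothing in the data is false and nothing is deleted; the scandal simply loses the ranking contest for general questions.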

Mary gets the list of candidates and reviews their positions on the issues important to her. Everything she interacted with looked legitimate and data-driven, with detailed answers to her questions. It didn't mention the recent scandal, so she proceeds to act as if it had never happened.

In a world where most people consume information by searching for it on their phones, the ability to keep whatever a company wants omitted from ever surfacing to users is massive. Even if the company has no particular interest in doing so for its own benefit, the ability to sell that service, or to tilt the scales, is so powerful that it is hard to ignore.

The value of AI to advertising is the perception of its intelligence

Right now, we are publishing as many articles and media pieces as we can about how intelligent AI is. It can pass the bar exam, it can pass certain medical exams, it can even interpret medical results. This creates the perception that the system is highly intelligent, and people assume that intelligence will be used to replace existing workers in those fields.

While that might happen, Google is primarily an ad company. YouTube ads account for 10.2% of its revenue, Google Network ads for 11.4% and ads from Google Search & other properties for 57.2%, a combined 78.8% from advertising. Meta is even more one-dimensional, with 97.5% of its revenue coming from advertising. None of these companies are going to turn down opportunities to deploy their AI systems into workplaces, but those are slow-growth businesses. It'll take years to convince hospitals to let their AI review results, to work through the regulatory problems of doing so, to have the results peer-checked, and so on.

Instead, there's simpler, lower-hanging fruit we're all missing. By funneling users away from the websites where they do the analysis themselves and toward the AI "answer", you can directly target users with high-cost advertising that will have a higher ROI than any conventional system. Users will be convinced they are receiving unbiased, data-based answers, while these companies use their control of adjacent systems like the phone OS, the browser and analytics to enrich what they know about the user.

That's the gold-rush element of AI. Whoever first establishes their platform as the one users see as intelligent, and gets it installed on phones, will win. Once that's established, it's going to be difficult to convince users to double-check answers across different bots. The winner will be able to grab the gold ring of advertising: a personalized recommendation from a trusted voice.

If this obvious approach occurred to me, I assume it's old news to people inside these teams. Even if regulators "cracked down", we know the delay between launching a technology and regulating it is measured in years, not months. That's still enough time to generate the kind of insane year-over-year growth investors demand.

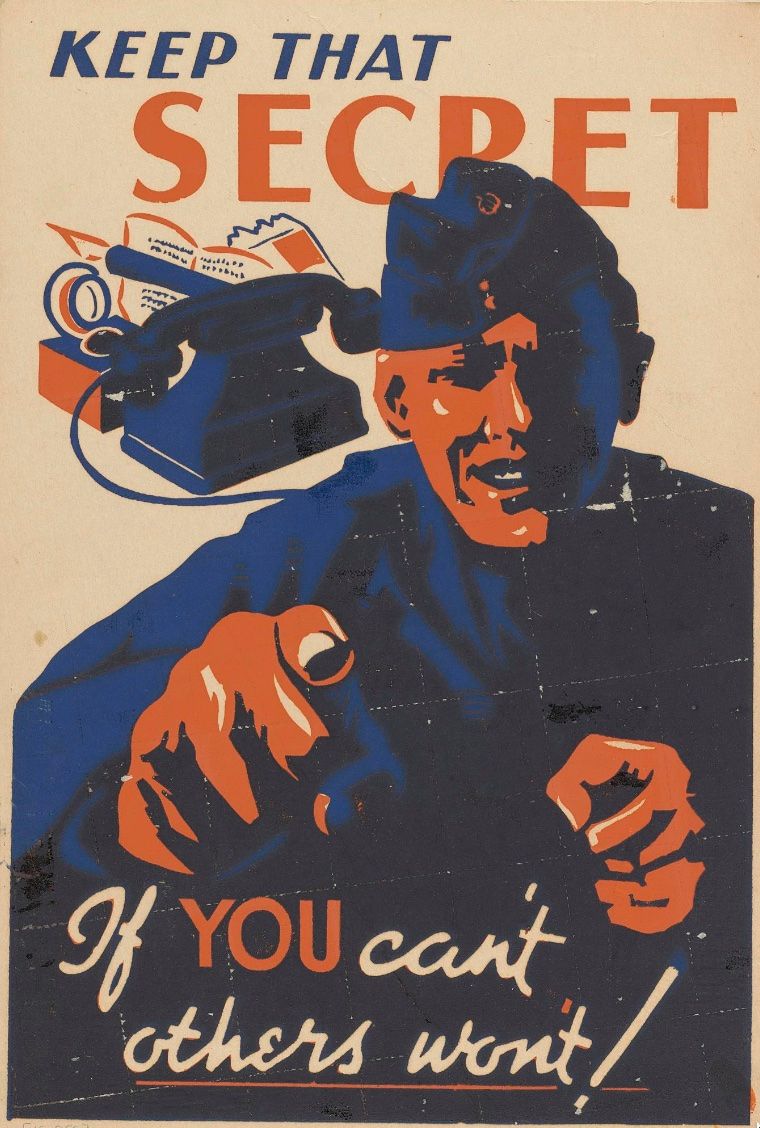

I'll always double-check the results

That presupposes you can. Our ability to detect whether content was generated by an AI is extremely poor right now, and there's no reason to think it will improve quickly. So you'll be on your own, cruising the internet for trusted sources through search results that are increasingly jam-packed with SEO-optimized junk text.

Will there be websites you can trust? Of course; you'll still be able to read the news. But even news sites are going to start adopting this technology (on top of many now belonging to politically motivated owners). In a sea of noise, it's going to become harder and harder to figure out what is real and what is fake. These AI bots will be able to deliver concise answers without making you wade through the noise.

Firehose of Falsehoods

According to a 2016 RAND Corporation study, the firehose of falsehood model has four distinguishing factors: it (1) is high-volume and multichannel, (2) is rapid, continuous, and repetitive, (3) lacks a commitment to objective reality; and (4) lacks commitment to consistency.[1] The high volume of messages, the use of multiple channels, and the use of internet bots and fake accounts are effective because people are more likely to believe a story when it appears to have been reported by multiple sources.[1] In addition to the recognizably-Russian news source, RT, for example, Russia disseminates propaganda using dozens of proxy websites, whose connection to RT is "disguised or downplayed."[8] People are also more likely to believe a story when they think many others believe it, especially if those others belong to a group with which they identify. Thus, an army of trolls can influence a person's opinion by creating the false impression that a majority of that person's neighbors support a given view.[1]

I think you are going to see this technique everywhere. The lower cost of flooding conventional information channels with fake messages, even obviously fake ones, is going to drown out real sources. People will need to turn to this automation just to get quick answers to simple questions. By destroying the usefulness of search and the wider internet, these tools will be positioned as the only source of truth.

The work needed to find independent, primary-source information about a particular topic, especially a controversial one, is going to simply exceed the capacity of the average person. So while some will live with the endless barrage of garbage information, others will use AI bots to return relevant results.

That's the value. Tech companies won't have to compete with each other, with the open internet or with start-up social media websites. If you want your message to reach its intended audience in a sea of fakes, this will be the only way to do it. That's the point, and it's why these companies are going to throw every resource they have at this problem. Whoever wins will be able to exclude the others for long enough to make them functionally irrelevant.

Think I'm wrong? Tell me why on Mastodon: https://c.im/@matdevdug